Data Lake Architects design and implement scalable data storage solutions, enabling efficient management and processing of vast datasets within cloud or on-premises environments. They specialize in integrating various data sources, optimizing data ingestion pipelines, and ensuring data governance and security compliance across the data ecosystem. Proficiency in big data technologies such as Apache Hadoop, Spark, and AWS Lake Formation is essential to architect robust, high-performance data lakes that support advanced analytics and machine learning initiatives.

Individuals with strong analytical skills and a background in data management are likely to be well suited to a Data Lake Architect role. Those who are comfortable working with complex data structures and have experience with cloud platforms and big data technologies will probably find that the job aligns with their expertise. However, candidates who prefer routine tasks and limited technical challenges may find the dynamic, evolving nature of the position a poor fit.

Qualification

Expertise in designing scalable, secure data lake architectures using platforms such as AWS Lake Formation, Azure Data Lake, or Google Cloud Storage is essential for a Data Lake Architect. Strong proficiency in big data technologies like Apache Hadoop, Spark, and Kafka, combined with experience in ETL processes, data governance, and metadata management, enhances a candidate's qualifications. A solid background in programming languages such as Python, Scala, or Java, along with certifications like AWS Certified Solutions Architect or Google Professional Data Engineer, significantly improves job prospects.

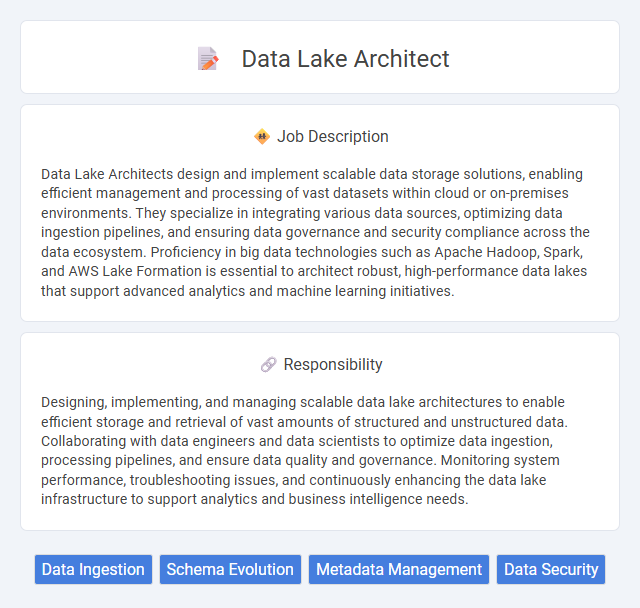

Responsibility

Data Lake Architects design, implement, and manage scalable data lake architectures that enable efficient storage and retrieval of vast amounts of structured and unstructured data. They collaborate with data engineers and data scientists to optimize data ingestion and processing pipelines and to ensure data quality and governance. They also monitor system performance, troubleshoot issues, and continuously enhance the data lake infrastructure to support analytics and business intelligence needs.

Benefit

Data Lake Architect roles offer the opportunity to design and manage scalable data storage solutions that improve data accessibility and efficiency. Professionals in this position typically gain experience with diverse data technologies and cloud platforms, which increases their marketability and expertise. Competitive salaries and career growth potential are often associated with the role because of high demand for specialized data architecture skills.

Challenge

Data Lake Architects are likely to face challenges in designing scalable, flexible data storage solutions that accommodate diverse data types and high-volume ingestion. They may have difficulty ensuring data quality and governance across decentralized sources while maintaining performance and cost efficiency. Managing integration complexity and evolving business requirements can require continuous adaptation and innovative problem-solving.

Career Advancement

Data Lake Architect roles offer significant career advancement opportunities for professionals who specialize in designing scalable, secure data storage solutions that support enterprise-wide analytics. Mastery of cloud platforms such as AWS, Azure, or Google Cloud, combined with expertise in big data technologies like Hadoop and Spark, positions professionals for leadership roles in data engineering and architecture. Continuous skill development in data governance, metadata management, and real-time data processing enhances prospects for senior positions, including Chief Data Officer or Data Strategy Manager.

Key Terms

Data Ingestion

Data Lake Architects specialize in designing scalable data ingestion pipelines that efficiently collect, process, and store diverse data types from multiple sources in data lakes. They implement optimized ETL/ELT processes leveraging tools like Apache Kafka, AWS Glue, and Azure Data Factory to ensure high throughput and low latency ingestion. Expertise in managing schema evolution, data quality validation, and metadata management is critical to maintaining robust and reliable data ingestion workflows.
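As a rough illustration of the data quality validation step mentioned above, the following Python sketch checks each incoming record, routes clean records into date-based partitions, and sets failing records aside in a quarantine list. The field names (`event_id`, `event_time`, `payload`), the `event_date=` partition naming, and the functions `validate` and `ingest` are all hypothetical; a real pipeline built on Kafka, AWS Glue, or Azure Data Factory would look different.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical set of required fields for an incoming event record.
REQUIRED_FIELDS = {"event_id", "event_time", "payload"}


def validate(record):
    """Return a list of data-quality problems (empty if the record is clean)."""
    problems = [f"missing field: {name}"
                for name in sorted(REQUIRED_FIELDS - record.keys())]
    if "event_time" in record:
        try:
            datetime.fromisoformat(record["event_time"])
        except (TypeError, ValueError):
            problems.append("event_time is not ISO 8601")
    return problems


def ingest(records):
    """Split a batch into date partitions and a quarantine list."""
    partitions = defaultdict(list)
    quarantine = []
    for record in records:
        problems = validate(record)
        if problems:
            # Keep the bad record together with the reasons it was rejected.
            quarantine.append({"record": record, "problems": problems})
        else:
            day = datetime.fromisoformat(record["event_time"]).date().isoformat()
            partitions[f"event_date={day}"].append(record)
    return dict(partitions), quarantine


batch = [
    {"event_id": 1, "event_time": "2024-05-01T12:00:00", "payload": {}},
    {"event_id": 2, "event_time": "not-a-date", "payload": {}},
    {"event_id": 3, "payload": {}},
]
partitions, quarantine = ingest(batch)
```

Keeping rejected records alongside the reason for rejection, rather than dropping them, makes it possible to inspect and replay them once the upstream source is fixed.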

Schema Evolution

Data Lake Architects design scalable data storage solutions that support schema evolution, enabling seamless integration of changing data formats without disrupting existing workflows. They implement flexible metadata management and version control strategies to maintain data consistency and accessibility as schemas evolve over time. Expertise in tools like Apache Iceberg, Delta Lake, or Apache Hudi is essential for handling schema changes efficiently within distributed data lake environments.
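To make the idea of a non-disruptive schema change concrete, here is a minimal Python sketch under simplified assumptions: a schema is a plain `{column: type}` dictionary, adding a column counts as compatible, and removing or retyping a column does not. The rules and the function names (`diff_schemas`, `is_backward_compatible`, `read_with_schema`) are illustrative only and are not the actual behavior of Apache Iceberg, Delta Lake, or Apache Hudi.

```python
def diff_schemas(old, new):
    """Compare two {column: type} mappings and report what changed."""
    added = {col: typ for col, typ in new.items() if col not in old}
    removed = sorted(col for col in old if col not in new)
    retyped = {col: (old[col], new[col])
               for col in old if col in new and old[col] != new[col]}
    return added, removed, retyped


def is_backward_compatible(old, new):
    """Simplified rule: only column additions are allowed."""
    _added, removed, retyped = diff_schemas(old, new)
    return not removed and not retyped


def read_with_schema(row, schema):
    """Project a row written under an older schema onto a newer one.

    Columns the row does not have are filled with None.
    """
    return {column: row.get(column) for column in schema}


v1 = {"id": "long", "name": "string"}
v2 = {"id": "long", "name": "string", "email": "string"}  # adds a column
v3 = {"id": "string", "name": "string"}                   # changes a type

old_row = {"id": 1, "name": "Ada"}
upgraded = read_with_schema(old_row, v2)
```

In this sketch, rows written under `v1` remain readable under `v2` because the new `email` column is simply filled with `None`, whereas the move to `v3` is flagged because existing readers would see a different type for `id`.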

Metadata Management

A Data Lake Architect specializing in Metadata Management designs and implements systems that capture, organize, and govern metadata to enhance data discoverability and usability across large-scale data lakes. Leveraging tools such as Apache Atlas or AWS Glue Data Catalog, they ensure metadata accuracy, lineage tracking, and compliance with data governance policies. Expertise in automating metadata ingestion and integrating with data governance frameworks is critical for maintaining data quality and supporting advanced analytics initiatives.
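Lineage tracking can be pictured as a graph walk over catalog entries. The Python sketch below is a toy in-memory catalog, not the API of Apache Atlas or AWS Glue Data Catalog; the `DatasetEntry` and `Catalog` classes, the dataset names, and the storage paths are all made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    """Minimal metadata record for one dataset in the lake."""
    name: str
    location: str
    owner: str
    upstream: list = field(default_factory=list)  # names of source datasets


class Catalog:
    """Toy in-memory metadata catalog with lineage lookup."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name] = entry

    def lineage(self, name):
        """Return all direct and indirect upstream dataset names, sorted."""
        seen = set()
        stack = list(self._entries[name].upstream)
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            # Sources that are not registered are still reported by name.
            if current in self._entries:
                stack.extend(self._entries[current].upstream)
        return sorted(seen)


catalog = Catalog()
catalog.register(DatasetEntry("raw_orders", "lake/raw/orders", "ingest-team"))
catalog.register(DatasetEntry("clean_orders", "lake/clean/orders",
                              "data-eng", upstream=["raw_orders"]))
catalog.register(DatasetEntry("sales_report", "lake/marts/sales",
                              "analytics", upstream=["clean_orders"]))

sources = catalog.lineage("sales_report")
```

Here `sources` lists every dataset that `sales_report` depends on, directly or indirectly, which is the kind of question a governance review or an impact analysis typically asks of a catalog.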

Data Security

A Data Lake Architect specializing in Data Security designs and implements robust frameworks to protect sensitive information across large-scale data repositories. They leverage encryption, access controls, and monitoring tools to secure unstructured and structured data, ensuring compliance with regulatory standards like GDPR and HIPAA. Expertise in data governance and threat detection helps prevent breaches while maintaining high availability and performance of data lakes.
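One common form of access control in a data lake is column-level masking, where the same row is returned differently depending on who reads it. The Python sketch below illustrates the idea with assumed inputs: the role names, the `SENSITIVE_COLUMNS` set, and the `mask` and `read_row` helpers are hypothetical, and a production system would normally rely on the platform's own policy engine and encryption rather than application code like this.

```python
import hashlib

# Hypothetical policy: which columns are sensitive, and which roles
# are cleared to see them unmasked.
SENSITIVE_COLUMNS = {"email", "ssn"}
ROLE_CLEARANCE = {"analyst": False, "compliance_officer": True}


def mask(value):
    """Replace a value with a short, deterministic SHA-256 based token."""
    digest = hashlib.sha256(str(value).encode("utf-8")).hexdigest()
    return f"masked:{digest[:12]}"


def read_row(row, role):
    """Return the row as the given role is allowed to see it."""
    if role not in ROLE_CLEARANCE:
        raise PermissionError(f"unknown role: {role}")
    if ROLE_CLEARANCE[role]:
        return dict(row)
    return {column: mask(value) if column in SENSITIVE_COLUMNS else value
            for column, value in row.items()}


row = {"customer_id": 42, "email": "ada@example.com", "country": "UK"}
analyst_view = read_row(row, "analyst")
officer_view = read_row(row, "compliance_officer")
```

The deterministic token lets analysts join and count on a masked column without seeing the underlying value. An unsalted hash of a low-entropy value can be guessed by brute force, so this sketch should not be read as sufficient on its own for GDPR or HIPAA compliance.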

kuljobs.com
