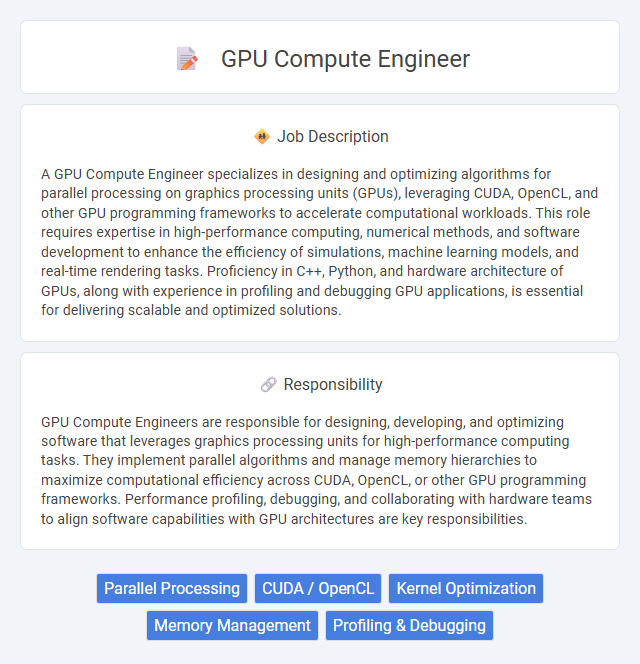

A GPU compute engineer designs and optimizes algorithms for parallel execution on graphics processing units (GPUs), using CUDA, OpenCL, and other GPU programming frameworks to accelerate computational workloads. The role requires expertise in high-performance computing, numerical methods, and software development to make simulations, machine learning models, and real-time rendering run efficiently. Proficiency in C++ and Python, a working knowledge of GPU hardware architecture, and experience profiling and debugging GPU applications are essential for delivering scalable, optimized solutions.

Individuals with strong analytical skills and a passion for hardware acceleration are likely to thrive as GPU compute engineers. Those comfortable programming in CUDA or OpenCL, and who enjoy solving complex parallel processing problems, may find the role well suited to their abilities. People who prefer routine tasks or have little interest in cutting-edge technology may struggle to keep pace with the rapid evolution of GPU computing.

Qualification

A GPU compute engineer needs strong expertise in parallel programming frameworks such as CUDA and OpenCL, along with proficiency in C++ and Python for software development. In-depth knowledge of GPU architecture and performance optimization techniques, plus experience with machine learning frameworks such as TensorFlow or PyTorch, are critical qualifications. Advanced degrees in computer science, electrical engineering, or related fields, combined with practical experience in high-performance computing (HPC) and algorithm optimization, are highly valued.

Responsibility

GPU compute engineers design, develop, and optimize software that uses graphics processing units for high-performance computing tasks. They implement parallel algorithms and manage the GPU memory hierarchy (registers, shared memory, caches, and global memory) to maximize computational efficiency, working in CUDA, OpenCL, or other GPU programming frameworks. Key responsibilities also include performance profiling, debugging, and collaborating with hardware teams to align software with GPU architectures.

Benefit

A GPU compute engineer is likely to see strong career growth, given the high demand for expertise in parallel processing and accelerated computing. The role may offer competitive compensation, including bonuses and stock options, reflecting the specialized skill set required. Access to cutting-edge hardware and innovative projects can support ongoing professional development and job satisfaction.

Challenge

GPU compute engineering likely involves complex problem-solving around optimizing software for parallel processing architectures. A central challenge is balancing computational performance against power consumption while working within hardware constraints such as memory capacity and bandwidth. Mastery of low-level programming and algorithm optimization is expected in order to handle these technical difficulties effectively.

Career Advancement

GPU compute engineers design and optimize software that runs on graphics processing units for high-performance computing in AI, scientific simulations, and data centers. Mastery of parallel programming frameworks such as CUDA and OpenCL opens pathways to senior roles such as GPU architect or research scientist. Continuous learning in emerging technologies and active contributions to open-source projects can accelerate career growth in this competitive field.

Key Terms

Parallel Processing

A GPU compute engineer specializes in designing and optimizing algorithms for parallel processing architectures to maximize computational efficiency and throughput. Their role involves leveraging CUDA, OpenCL, and other parallel programming frameworks to accelerate data-intensive applications in machine learning, scientific simulations, and graphics rendering. Expertise in thread-level parallelism, memory management, and kernel optimization is essential to achieve high-performance computing on modern GPU platforms.
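As a rough illustration of thread-level parallelism, the sketch below assumes an NVIDIA GPU with the CUDA toolkit; `vector_add` and `gpu_vector_add` are hypothetical names chosen for this example. Each GPU thread computes a single element of a vector sum. The host rounds the block count up so that every element gets a thread, and the bounds check inside the kernel discards the surplus threads in the last block.

```cuda
#include <cassert>
#include <cuda_runtime.h>
#include <vector>

// Each thread computes exactly one output element: c[i] = a[i] + b[i].
__global__ void vector_add(const float* a, const float* b, float* c, int n) {
    // Global index built from the block index and the thread's position in its block.
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {  // the last block may contain threads past the end of the arrays
        c[i] = a[i] + b[i];
    }
}

// Hypothetical host helper: copies inputs to the GPU, runs the kernel, copies the result back.
// Error checking is omitted for brevity.
std::vector<float> gpu_vector_add(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size() && !a.empty());
    int n = static_cast<int>(a.size());
    size_t bytes = n * sizeof(float);
    float *d_a, *d_b, *d_c;
    cudaMalloc(&d_a, bytes);
    cudaMalloc(&d_b, bytes);
    cudaMalloc(&d_c, bytes);
    cudaMemcpy(d_a, a.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_b, b.data(), bytes, cudaMemcpyHostToDevice);

    const int threads = 256;
    const int blocks = (n + threads - 1) / threads;  // round up so every element is covered
    vector_add<<<blocks, threads>>>(d_a, d_b, d_c, n);

    std::vector<float> c(n);
    // A cudaMemcpy on the default stream waits for the preceding kernel to finish.
    cudaMemcpy(c.data(), d_c, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_a);
    cudaFree(d_b);
    cudaFree(d_c);
    return c;
}
```

Production code would also check the status returned by each runtime call; the next key term touches on that.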

CUDA / OpenCL

A GPU compute engineer optimizes high-performance computing applications with the CUDA and OpenCL frameworks to exploit the parallel processing power of GPUs. Expertise in developing and debugging parallel algorithms, managing memory, and tuning performance is essential to maximize computational efficiency. Proficiency in writing CUDA kernels, using the OpenCL API, and understanding GPU architecture enables scalable solutions in graphics, machine learning, and scientific simulations.
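The host side of a CUDA program typically follows an allocate, copy, launch, synchronize, copy-back sequence. The sketch below shows that sequence around a SAXPY kernel (`y = a*x + y`) written with a grid-stride loop, so a fixed launch size covers any `n`. `CUDA_CHECK` and `gpu_saxpy` are hypothetical helpers, not part of the CUDA API. An OpenCL version would follow the same steps through calls such as `clCreateBuffer` and `clEnqueueNDRangeKernel`, which are not shown here.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>
#include <vector>

// Hypothetical helper macro: abort with a readable message if a CUDA runtime call fails.
#define CUDA_CHECK(call)                                                  \
    do {                                                                  \
        cudaError_t err_ = (call);                                        \
        if (err_ != cudaSuccess) {                                        \
            std::fprintf(stderr, "CUDA error %s at %s:%d\n",              \
                         cudaGetErrorString(err_), __FILE__, __LINE__);   \
            std::exit(EXIT_FAILURE);                                      \
        }                                                                 \
    } while (0)

// SAXPY (y = a*x + y) with a grid-stride loop: each thread handles every
// (blockDim.x * gridDim.x)-th element, so any launch size covers all n elements.
__global__ void saxpy(int n, float a, const float* x, float* y) {
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n;
         i += blockDim.x * gridDim.x) {
        y[i] = a * x[i] + y[i];
    }
}

// Hypothetical host wrapper showing the usual allocate / copy / launch / copy-back sequence.
void gpu_saxpy(float a, const std::vector<float>& x, std::vector<float>& y) {
    assert(x.size() == y.size());
    int n = static_cast<int>(x.size());
    size_t bytes = n * sizeof(float);
    float *d_x = nullptr, *d_y = nullptr;
    CUDA_CHECK(cudaMalloc(&d_x, bytes));
    CUDA_CHECK(cudaMalloc(&d_y, bytes));
    CUDA_CHECK(cudaMemcpy(d_x, x.data(), bytes, cudaMemcpyHostToDevice));
    CUDA_CHECK(cudaMemcpy(d_y, y.data(), bytes, cudaMemcpyHostToDevice));

    saxpy<<<128, 256>>>(n, a, d_x, d_y);  // fixed grid; the stride loop covers the rest
    CUDA_CHECK(cudaGetLastError());       // catches invalid launch configurations
    CUDA_CHECK(cudaDeviceSynchronize());  // surfaces errors raised while the kernel ran

    CUDA_CHECK(cudaMemcpy(y.data(), d_y, bytes, cudaMemcpyDeviceToHost));
    CUDA_CHECK(cudaFree(d_x));
    CUDA_CHECK(cudaFree(d_y));
}
```

Because kernel launches are asynchronous, `cudaGetLastError()` right after the launch only catches configuration problems, while `cudaDeviceSynchronize()` is what reports faults that occur during execution.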

Kernel Optimization

A GPU compute engineer specializing in kernel optimization improves the performance of parallel workloads by tuning GPU kernels for better resource utilization and lower latency. The work involves analyzing memory access patterns, minimizing thread divergence, using shared memory, and keeping enough warps resident that the warp scheduler can hide memory latency, all to maximize throughput. Proficiency in CUDA, OpenCL, or similar frameworks, together with strong knowledge of GPU architecture, enables high-performance solutions for scientific simulations, machine learning, and real-time graphics rendering.
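One common kernel-optimization pattern is a two-level sum reduction: threads first combine values within a warp using the `__shfl_down_sync` register shuffle, then a single warp combines the per-warp results staged in shared memory. Because whole warps always take the same branch, this avoids much of the divergence and many of the `__syncthreads()` barriers of a naive shared-memory tree reduction. This is a sketch assuming CUDA 9 or later and a block size that is a multiple of 32; `block_sum`, `warp_sum`, and `gpu_sum` are hypothetical names, and the per-block partial sums are added on the CPU for simplicity.

```cuda
#include <cassert>
#include <cuda_runtime.h>
#include <vector>

constexpr int kBlock = 256;  // threads per block; assumed to be a multiple of 32

// Sum the 32 values held by one warp using register shuffles (no shared memory needed).
__device__ float warp_sum(float v) {
    for (int offset = 16; offset > 0; offset /= 2) {
        v += __shfl_down_sync(0xffffffff, v, offset);
    }
    return v;  // lane 0 now holds the warp's total
}

// Each block writes one partial sum of its share of `in` to out[blockIdx.x].
__global__ void block_sum(const float* in, float* out, int n) {
    __shared__ float warp_totals[kBlock / 32];
    float v = 0.0f;
    // Grid-stride accumulation: consecutive threads read consecutive addresses (coalesced).
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n; i += blockDim.x * gridDim.x) {
        v += in[i];
    }
    int lane = threadIdx.x % 32;
    int warp = threadIdx.x / 32;
    v = warp_sum(v);
    if (lane == 0) warp_totals[warp] = v;
    __syncthreads();
    // The first warp reduces the per-warp totals; the whole warp takes this branch together.
    if (warp == 0) {
        v = (lane < kBlock / 32) ? warp_totals[lane] : 0.0f;
        v = warp_sum(v);
        if (lane == 0) out[blockIdx.x] = v;
    }
}

// Hypothetical host helper: one kernel pass yields per-block partials, summed on the CPU.
// Error checking is omitted for brevity.
float gpu_sum(const std::vector<float>& h_in) {
    int n = static_cast<int>(h_in.size());
    const int blocks = 64;
    float *d_in, *d_partial;
    cudaMalloc(&d_in, n * sizeof(float));
    cudaMalloc(&d_partial, blocks * sizeof(float));
    cudaMemcpy(d_in, h_in.data(), n * sizeof(float), cudaMemcpyHostToDevice);
    block_sum<<<blocks, kBlock>>>(d_in, d_partial, n);
    std::vector<float> partial(blocks);
    cudaMemcpy(partial.data(), d_partial, blocks * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(d_in);
    cudaFree(d_partial);
    float total = 0.0f;
    for (float p : partial) total += p;
    return total;
}
```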

Memory Management

GPU compute engineers optimize memory management to improve parallel processing efficiency and reduce latency. They apply techniques such as staging data in shared memory, coalescing global memory accesses, and minimizing global memory traffic to maximize throughput. Solid knowledge of CUDA or OpenCL is essential for efficient memory allocation and bandwidth optimization in high-performance computing tasks.
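Matrix transpose is a standard example of shared-memory staging for coalescing: reading rows of the input is coalesced, but writing them straight out as columns of the output is not. The sketch below (assuming CUDA, row-major storage, and the hypothetical names `transpose_tiled` and `gpu_transpose`) loads a 32x32 tile with coalesced reads, then writes it back transposed with coalesced writes. The extra padding column in the shared tile is a common trick to avoid shared-memory bank conflicts when the tile is read column-wise.

```cuda
#include <cassert>
#include <cuda_runtime.h>
#include <vector>

constexpr int TILE = 32;       // tile edge; one warp spans a tile row
constexpr int BLOCK_ROWS = 8;  // each thread copies TILE / BLOCK_ROWS = 4 rows

// Transpose a rows x cols row-major matrix into a cols x rows row-major matrix.
__global__ void transpose_tiled(const float* in, float* out, int rows, int cols) {
    // +1 padding column places each tile row in a different bank offset, avoiding
    // 32-way bank conflicts when the tile is read column-wise below.
    __shared__ float tile[TILE][TILE + 1];

    int x = blockIdx.x * TILE + threadIdx.x;  // column in `in`
    int y = blockIdx.y * TILE + threadIdx.y;  // row in `in`
    for (int j = 0; j < TILE; j += BLOCK_ROWS) {
        if (x < cols && y + j < rows) {
            tile[threadIdx.y + j][threadIdx.x] = in[(y + j) * cols + x];  // coalesced read
        }
    }
    __syncthreads();  // every thread reaches this barrier, even out-of-range ones

    x = blockIdx.y * TILE + threadIdx.x;  // column in `out` (a row index of `in`)
    y = blockIdx.x * TILE + threadIdx.y;  // row in `out` (a column index of `in`)
    for (int j = 0; j < TILE; j += BLOCK_ROWS) {
        if (x < rows && y + j < cols) {
            out[(y + j) * rows + x] = tile[threadIdx.x][threadIdx.y + j];  // coalesced write
        }
    }
}

// Hypothetical host helper; error checking omitted for brevity.
std::vector<float> gpu_transpose(const std::vector<float>& h_in, int rows, int cols) {
    assert(static_cast<int>(h_in.size()) == rows * cols);
    size_t bytes = h_in.size() * sizeof(float);
    float *d_in, *d_out;
    cudaMalloc(&d_in, bytes);
    cudaMalloc(&d_out, bytes);
    cudaMemcpy(d_in, h_in.data(), bytes, cudaMemcpyHostToDevice);
    dim3 block(TILE, BLOCK_ROWS);
    dim3 grid((cols + TILE - 1) / TILE, (rows + TILE - 1) / TILE);
    transpose_tiled<<<grid, block>>>(d_in, d_out, rows, cols);
    std::vector<float> h_out(h_in.size());
    cudaMemcpy(h_out.data(), d_out, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_in);
    cudaFree(d_out);
    return h_out;
}
```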

Profiling & Debugging

Profiling and debugging are essential skills for a GPU compute engineer, involving specialized tools such as NVIDIA Nsight and AMD Radeon GPU Profiler to identify performance bottlenecks and optimize kernel execution. Skill in analyzing memory bandwidth, occupancy, and thread divergence enables effective troubleshooting of parallel processing issues and improves computational efficiency. Expertise in debugging techniques, including shader debugging and race-condition detection, supports robust development and maintenance of GPU-accelerated applications.
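Before reaching for a full profiler, a quick first measurement is to time a kernel with CUDA events and convert the result into effective bandwidth, which can then be compared with the device's theoretical peak. The sketch below assumes CUDA; `copy_kernel` and `measure_copy_bandwidth` are hypothetical names, and a single timed launch after one warm-up run is a simplification (careful measurements usually average several runs).

```cuda
#include <cassert>
#include <cuda_runtime.h>

// A simple copy kernel whose achieved bandwidth can be compared with the device's peak.
__global__ void copy_kernel(const float* in, float* out, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) out[i] = in[i];
}

// Hypothetical helper: times one launch with CUDA events and returns effective
// bandwidth in GB/s (bytes read + bytes written, divided by elapsed time).
// Returns a negative value if the launch reported an error.
double measure_copy_bandwidth(int n) {
    float *d_in, *d_out;
    size_t bytes = static_cast<size_t>(n) * sizeof(float);
    cudaMalloc(&d_in, bytes);
    cudaMalloc(&d_out, bytes);
    cudaMemset(d_in, 0, bytes);

    cudaEvent_t start, stop;
    cudaEventCreate(&start);
    cudaEventCreate(&stop);

    const int threads = 256;
    const int blocks = (n + threads - 1) / threads;
    copy_kernel<<<blocks, threads>>>(d_in, d_out, n);  // warm-up launch, not timed

    cudaEventRecord(start);  // events are recorded on the default stream
    copy_kernel<<<blocks, threads>>>(d_in, d_out, n);
    cudaEventRecord(stop);
    cudaError_t err = cudaGetLastError();  // launch-configuration errors
    cudaEventSynchronize(stop);            // wait until the timed kernel has finished

    float ms = 0.0f;
    cudaEventElapsedTime(&ms, start, stop);

    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    cudaFree(d_in);
    cudaFree(d_out);
    if (err != cudaSuccess) return -1.0;
    return (2.0 * bytes) / (ms * 1.0e-3) / 1.0e9;
}
```

For deeper analysis, NVIDIA Nsight Compute (`ncu ./app`) reports metrics such as achieved occupancy and memory throughput, and `compute-sanitizer --tool racecheck ./app` flags shared-memory data races.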

kuljobs.com
