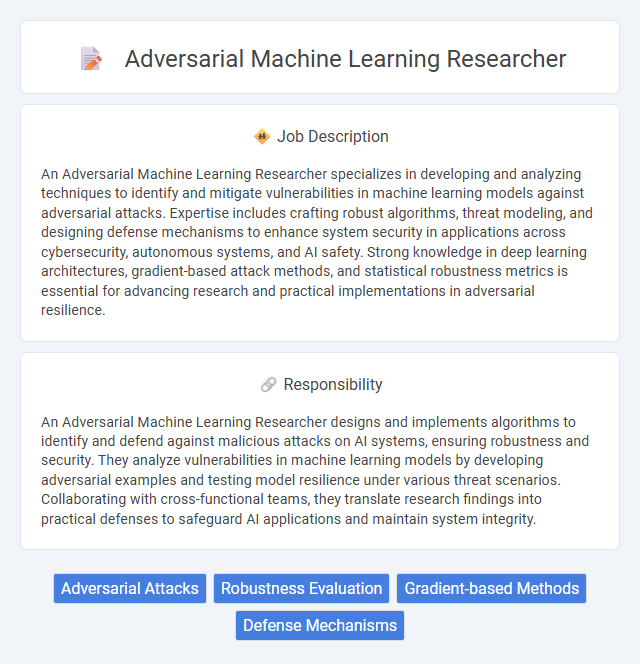

An Adversarial Machine Learning Researcher specializes in developing and analyzing techniques to identify and mitigate vulnerabilities in machine learning models against adversarial attacks. Expertise includes crafting robust algorithms, threat modeling, and designing defense mechanisms to enhance system security in applications across cybersecurity, autonomous systems, and AI safety. Strong knowledge of deep learning architectures, gradient-based attack methods, and statistical robustness metrics is essential for advancing research and practical implementations in adversarial resilience.

Individuals with strong analytical skills and a deep understanding of cybersecurity principles are likely well-suited for an Adversarial Machine Learning Researcher role. Those who are comfortable working in fast-evolving environments, tackling complex problems related to AI vulnerabilities, will probably thrive. Candidates lacking a solid foundation in both machine learning algorithms and threat modeling might find the position challenging.

Qualification

An Adversarial Machine Learning Researcher must possess deep expertise in cybersecurity, machine learning algorithms, and statistical analysis, typically backed by an advanced degree such as a Master's or PhD in Computer Science, Artificial Intelligence, or a related field. Proficiency in Python and in deep learning frameworks such as TensorFlow and PyTorch is essential, alongside experience with adversarial attack and defense techniques, threat modeling, and data poisoning methods. Strong analytical skills, a solid understanding of neural networks and reinforcement learning, and research published in top-tier conferences or journals significantly strengthen a candidate's qualifications.

Responsibility

An Adversarial Machine Learning Researcher designs and implements algorithms to identify and defend against malicious attacks on AI systems, ensuring robustness and security. They analyze vulnerabilities in machine learning models by developing adversarial examples and testing model resilience under various threat scenarios. Collaborating with cross-functional teams, they translate research findings into practical defenses to safeguard AI applications and maintain system integrity.

Benefit

Adversarial Machine Learning Researchers likely gain access to cutting-edge tools and datasets that enhance their ability to identify vulnerabilities in AI systems. The role may also offer opportunities to collaborate with leading experts, which makes impactful contributions to cybersecurity more likely. Positions in this field often include competitive salaries and opportunities for professional growth in a rapidly evolving domain.

Challenge

Adversarial Machine Learning Researchers likely face the challenge of developing robust algorithms that can withstand sophisticated attacks aimed at exploiting model vulnerabilities. The complexity of predicting and defending against constantly evolving adversarial tactics may require advanced knowledge in both machine learning and cybersecurity domains. This challenge probably drives ongoing innovation and necessitates continuous adaptation to emerging threat landscapes.

Career Advancement

Advancing a career as an Adversarial Machine Learning Researcher involves deepening expertise in designing robust AI models that resist adversarial attacks, coupled with publishing findings in leading AI and cybersecurity journals. Gaining proficiency in cutting-edge techniques such as generative adversarial networks (GANs) and threat modeling enhances job prospects in top-tier tech companies and research institutions. Leadership roles and collaboration with interdisciplinary teams provide pathways to influence AI security policies and develop industry standards, accelerating professional growth.

Key Terms

Adversarial Attacks

Adversarial Machine Learning Researchers specialize in developing and analyzing adversarial attacks that exploit vulnerabilities in AI models, particularly deep neural networks. Their work involves crafting small perturbations designed to deceive classifiers, and using what these attacks reveal to improve the robustness of machine learning systems. Expertise in both white-box attacks (where the attacker has full access to the model's parameters and gradients) and black-box attacks (where the attacker can only query the model's outputs) helps advance defense strategies against evolving threats in AI security.
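To make the white-box/black-box distinction concrete, here is a minimal sketch of a score-based black-box attack, assuming the attacker can only query a model's score and never reads its weights. The hidden linear model, its weights, and the names `query_score` and `random_search_attack` are hypothetical; the attack is a simple random search over corners of an L-infinity ball, not any specific published method.

```python
import random

# Hypothetical hidden model: a linear scorer whose weights the attacker never
# reads. A positive score means the original (true) class is predicted.
_HIDDEN_W = [2.0, -1.0, 0.5]
_HIDDEN_B = 0.1


def query_score(x):
    """Black-box oracle: the attacker sees only this returned score."""
    return sum(w * xi for w, xi in zip(_HIDDEN_W, x)) + _HIDDEN_B


def random_search_attack(x, eps, max_queries=200, seed=0):
    """Randomly sample corners of the L-infinity ball of radius eps around x,
    keep the candidate with the lowest score, and stop as soon as the score
    becomes negative (the predicted class flips)."""
    rng = random.Random(seed)
    best, best_score = list(x), query_score(x)
    for _ in range(max_queries):
        candidate = [xi + eps * rng.choice((-1.0, 1.0)) for xi in x]
        score = query_score(candidate)
        if score < best_score:
            best, best_score = candidate, score
        if best_score < 0:
            break
    return best, best_score


x = [0.3, 0.2, 0.1]
x_adv, score = random_search_attack(x, eps=0.2)
print("clean score:", query_score(x))
print("adversarial score:", score)
```

The query budget here is arbitrary; practical black-box attacks aim to flip the prediction with as few queries as possible, whereas a white-box attacker could read the gradient directly.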

Robustness Evaluation

An Adversarial Machine Learning Researcher specializing in Robustness Evaluation focuses on designing and implementing methods to assess the resilience of machine learning models against adversarial attacks. This role involves scrutinizing model vulnerabilities through rigorous testing with crafted perturbations to ensure reliability in real-world applications. Expertise in threat modeling, attack simulation, and defensive strategy evaluation is essential to advance the security and robustness of AI systems.
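One concrete robustness metric is robust accuracy: the fraction of evaluation points that stay correctly classified under every perturbation within a budget ε. For a linear classifier sign(w·x + b) and an L-infinity budget the worst case can be computed exactly, because the most damaging perturbation lowers the margin y(w·x + b) by exactly ε‖w‖₁. The sketch below assumes labels in {-1, +1}; the weights and data points are hypothetical. Deep networks have no such closed form, so their robust accuracy is usually estimated with strong attacks or bounded with certification methods.

```python
def robust_accuracy(w, b, data, eps):
    """Exact L-infinity robust accuracy of the linear classifier sign(w.x + b).

    data is a list of (x, y) pairs with y in {-1, +1}. A point counts as
    robust only if its margin y * (w.x + b) stays positive after the
    worst-case perturbation, which reduces the margin by eps * ||w||_1.
    """
    l1_norm = sum(abs(wi) for wi in w)
    robust = 0
    for x, y in data:
        margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
        if margin - eps * l1_norm > 0:
            robust += 1
    return robust / len(data)


# Hypothetical model and evaluation set.
w, b = [1.0, -1.0], 0.0
data = [([2.0, 0.0], 1), ([0.0, 1.0], -1), ([0.5, 0.2], 1), ([-1.0, 0.5], -1)]

# Sweep the perturbation budget to trace a robustness curve.
for eps in (0.0, 0.25, 0.6, 1.1):
    print(f"eps={eps}: robust accuracy = {robust_accuracy(w, b, data, eps)}")
```

Plotting robust accuracy against ε is a common way to compare models under the same threat model: the curve that falls off more slowly belongs to the more robust model.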

Gradient-based Methods

Gradient-based methods are central to adversarial machine learning research: they use the gradient of a model's loss with respect to its input to generate perturbations that reveal vulnerabilities in neural networks, and those findings in turn guide the development of more robust models. Researchers use techniques like FGSM (Fast Gradient Sign Method) and iterative gradient attacks to craft adversarial examples that test model security and inform defense mechanisms. This work demands expertise in optimization algorithms and deep learning architectures, and the ability to analyze gradient information to harden models against sophisticated attacks.
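FGSM perturbs an input x with true label y by a single step along the sign of the loss gradient, x_adv = x + ε · sign(∇ₓJ(θ, x, y)), where J is the training loss and ε bounds the L-infinity size of the change. The sketch below applies it to a toy logistic-regression model, an assumption chosen because its input gradient has the closed form (p - y)·w, so no autodiff library is needed; the weights and inputs are hypothetical. `iterative_fgsm` shows the iterative variant (often called the Basic Iterative Method, or PGD when combined with a random start): repeated small steps, each projected back into the ε-ball around the original input.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, w, b):
    """P(y = 1 | x) under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, y, w, b, eps):
    """One FGSM step. For binary cross-entropy loss the gradient with respect
    to the input is (p - y) * w, where p = predict_proba(x, w, b)."""
    p = predict_proba(x, w, b)
    grad_x = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad_x)]


def iterative_fgsm(x, y, w, b, eps, alpha, steps):
    """Repeated FGSM steps of size alpha, each clipped back into the
    L-infinity ball of radius eps around the original x."""
    x_adv = list(x)
    for _ in range(steps):
        stepped = fgsm(x_adv, y, w, b, alpha)
        x_adv = [min(max(s, xi - eps), xi + eps) for s, xi in zip(stepped, x)]
    return x_adv


w, b = [2.0, -1.0], 0.0   # hypothetical trained weights
x, y = [0.5, 0.3], 1      # correctly classified: p > 0.5

x_fgsm = fgsm(x, y, w, b, eps=0.3)
x_iter = iterative_fgsm(x, y, w, b, eps=0.3, alpha=0.1, steps=5)
print("clean p(y=1):", predict_proba(x, w, b))
print("FGSM p(y=1):", predict_proba(x_fgsm, w, b))
print("iterative p(y=1):", predict_proba(x_iter, w, b))
```

For a linear model the gradient sign never changes, so both attacks end at the same corner of the ε-ball; on a deep network the iterative version is usually stronger because the gradient direction shifts as the input moves.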

Defense Mechanisms

Adversarial Machine Learning researchers specialize in developing defense mechanisms that protect AI models from malicious inputs and data manipulation. Techniques such as adversarial training, robust optimization, and anomaly detection are used to improve resilience against evasion, poisoning, and model inversion attacks. Continued work on defense algorithms helps keep machine learning applications reliable and secure in sensitive domains such as cybersecurity, autonomous systems, and financial services.
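Adversarial training replaces clean training inputs with adversarial examples generated against the current model, so the model learns to classify them correctly. Robust optimization frames the same idea as minimizing the worst-case loss within an ε-ball, and FGSM serves as a cheap one-step approximation of that inner maximization. The sketch below assumes a toy logistic-regression model trained with plain stochastic gradient descent; the dataset, learning rate, ε, and epoch count are hypothetical choices.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dot(a, c):
    return sum(ai * ci for ai, ci in zip(a, c))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, y, w, b, eps):
    """FGSM for logistic regression: the input gradient of the
    cross-entropy loss is (p - y) * w."""
    p = sigmoid(dot(w, x) + b)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def train(data, eps=0.0, lr=0.1, epochs=200):
    """SGD logistic regression. With eps > 0, every update is computed on the
    FGSM-perturbed input instead of the clean one (adversarial training)."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_train = fgsm(x, y, w, b, eps) if eps > 0 else x
            err = sigmoid(dot(w, x_train) + b) - y  # dLoss/dz for cross-entropy
            w = [wi - lr * err * xi for wi, xi in zip(w, x_train)]
            b -= lr * err
    return w, b


def fgsm_accuracy(w, b, data, eps):
    """Accuracy on FGSM-perturbed copies of the given points."""
    hits = 0
    for x, y in data:
        p = sigmoid(dot(w, fgsm(x, y, w, b, eps)) + b)
        hits += (p > 0.5) == (y == 1)
    return hits / len(data)


# Hypothetical, linearly separable toy data with labels in {0, 1}.
data = [([2.0, 2.0], 1), ([3.0, 1.0], 1), ([-2.0, -2.0], 0), ([-3.0, -1.0], 0)]
w_adv, b_adv = train(data, eps=0.5)
print("weights:", w_adv, "bias:", b_adv)
print("FGSM accuracy:", fgsm_accuracy(w_adv, b_adv, data, eps=0.5))
```

Calling `train(data)` with the default `eps=0.0` gives an ordinary clean-trained baseline to compare against; on harder data than this toy set, the gap in FGSM accuracy between the two models is what adversarial training is meant to close.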

kuljobs.com