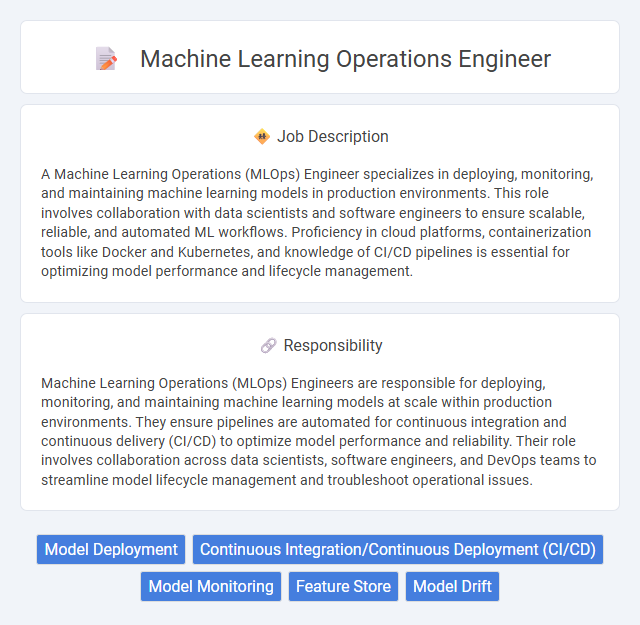

A Machine Learning Operations (MLOps) Engineer specializes in deploying, monitoring, and maintaining machine learning models in production environments. The role involves working with data scientists and software engineers to keep ML workflows scalable, reliable, and automated. Proficiency in cloud platforms, container tooling such as Docker and Kubernetes, and CI/CD pipelines is essential for managing model performance and the model lifecycle.

Individuals with strong analytical skills and a passion for automating machine learning workflows tend to thrive as machine learning operations engineers. Those who enjoy troubleshooting complex systems and collaborating across data science and IT teams will generally find the role a good fit. Candidates who prefer routine tasks, or who lack a background in both software engineering and data science, may struggle in this position.

Qualification

A Machine Learning Operations (MLOps) Engineer needs proficiency in Python, Docker, Kubernetes, and cloud platforms such as AWS, Azure, or Google Cloud to deploy scalable ML models. Expertise in CI/CD pipelines, model monitoring, and tools such as MLflow (experiment tracking and model registry) and DVC (data versioning) is essential to maintain model performance and reliability. Strong skills in automation and software engineering, along with the ability to collaborate with data scientists and DevOps teams, are critical for operationalizing machine learning workflows.

Responsibility

Machine Learning Operations (MLOps) Engineers are responsible for deploying, monitoring, and maintaining machine learning models at scale in production environments. They automate pipelines for continuous integration and continuous delivery (CI/CD) so that models are tested and released reliably. The role involves collaborating with data scientists, software engineers, and DevOps teams to streamline model lifecycle management and troubleshoot operational issues.

Benefit

A Machine Learning Operations (MLOps) engineer streamlines the deployment and monitoring of machine learning models, which improves model reliability and scalability. The role also tends to improve collaboration between data scientists and IT teams, resulting in faster delivery of AI-driven solutions. Employers may see reduced operational costs and increased productivity from more efficient model lifecycle management.

Challenge

Machine learning operations engineers face significant challenges in integrating complex ML models into production environments while keeping them scalable and reliable. Managing continuous deployment pipelines and monitoring model performance in real time demands strong problem-solving skills and cross-functional collaboration. Handling data quality issues and adapting to rapidly evolving technologies adds further complexity to their daily tasks.

Career Advancement

Machine learning operations engineers drive the deployment and scalability of AI models, ensuring a smooth path from development to production environments. Mastery of MLOps tools such as Kubernetes, Docker, and MLflow supports career growth because it demonstrates the ability to manage the model lifecycle and run models efficiently. Progression opportunities include senior engineer roles, AI project lead positions, and a potential transition into machine learning architect or data science management pathways.

Key Terms

Model Deployment

A Machine Learning Operations Engineer specializes in deploying machine learning models into production environments, ensuring scalability, reliability, and maintainability. They manage continuous integration and continuous deployment (CI/CD) pipelines, automate model versioning, and monitor model performance to detect data drift or degradation. Expertise in cloud platforms, containerization tools like Docker and Kubernetes, and ML frameworks is crucial for optimizing deployment workflows and reducing latency.

Continuous Integration/Continuous Deployment (CI/CD)

A Machine Learning Operations (MLOps) engineer implements Continuous Integration/Continuous Deployment (CI/CD) pipelines tailored to machine learning models so that updates ship smoothly and deployments scale. They automate version control, testing, and monitoring of models in production environments using tools like Jenkins, GitLab CI, or Azure DevOps. Mastery of containerization (Docker, Kubernetes) and infrastructure as code (Terraform, Ansible) supports reliable model delivery and operational efficiency across cloud platforms.
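One way a CI/CD pipeline can test a model before release is a promotion gate: a small check that compares a candidate model's evaluation metrics against the model currently in production. The sketch below is illustrative only. The `ModelMetrics` fields, the `should_promote` function, and the default thresholds are hypothetical names and values chosen for this example, not part of Jenkins, GitLab CI, or Azure DevOps.

```python
from dataclasses import dataclass


@dataclass
class ModelMetrics:
    """Evaluation results for one model version (hypothetical fields)."""
    accuracy: float        # fraction of correct predictions on a held-out set
    p95_latency_ms: float  # 95th percentile inference latency


def should_promote(
    candidate: ModelMetrics,
    production: ModelMetrics,
    min_accuracy_gain: float = 0.0,   # assumed policy: must not be worse
    max_latency_ms: float = 200.0,    # assumed latency budget
) -> bool:
    """Return True only if the candidate passes both the accuracy and latency checks."""
    accurate_enough = candidate.accuracy >= production.accuracy + min_accuracy_gain
    fast_enough = candidate.p95_latency_ms <= max_latency_ms
    return accurate_enough and fast_enough


production = ModelMetrics(accuracy=0.91, p95_latency_ms=110.0)
good_candidate = ModelMetrics(accuracy=0.93, p95_latency_ms=120.0)
slow_candidate = ModelMetrics(accuracy=0.95, p95_latency_ms=350.0)

print(should_promote(good_candidate, production))
print(should_promote(slow_candidate, production))
```

In a pipeline job, a script like this would typically exit with a non-zero status when the gate fails, so the deployment stage never runs for a model that regresses.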

Model Monitoring

Machine Learning Operations Engineers specialize in model monitoring to ensure continuous performance and accuracy of deployed machine learning models. They implement automated tracking systems for real-time detection of data drift, model degradation, and anomalous predictions using tools like Prometheus, Grafana, and MLflow. These engineers analyze monitoring metrics to trigger retraining or alerting workflows, maintaining model reliability and compliance with SLAs in production environments.
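The idea of turning monitoring metrics into alerting or retraining actions can be sketched with a rolling accuracy window. This is a minimal illustration, assuming ground-truth labels eventually arrive for each prediction. The `AccuracyMonitor` class and its thresholds are hypothetical and are not an API of Prometheus, Grafana, or MLflow.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent predictions and suggests an action."""

    def __init__(self, window: int = 100,
                 alert_below: float = 0.90,     # assumed alerting threshold
                 retrain_below: float = 0.80):  # assumed retraining threshold
        self.outcomes = deque(maxlen=window)    # True where prediction == label
        self.alert_below = alert_below
        self.retrain_below = retrain_below

    def record(self, prediction, label) -> str:
        """Store one outcome and return 'ok', 'alert', or 'retrain'."""
        self.outcomes.append(prediction == label)
        if len(self.outcomes) < self.outcomes.maxlen:
            return "ok"  # window not full yet, so the estimate is too noisy
        accuracy = sum(self.outcomes) / len(self.outcomes)
        if accuracy < self.retrain_below:
            return "retrain"
        if accuracy < self.alert_below:
            return "alert"
        return "ok"


monitor = AccuracyMonitor(window=10)
for _ in range(10):
    status = monitor.record(prediction=1, label=1)  # ten correct predictions
print(status)

for _ in range(3):
    status = monitor.record(prediction=1, label=0)  # three wrong predictions
    print(status)
```

In practice the returned status would feed an alerting channel or kick off a retraining job rather than being printed.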

Feature Store

Machine Learning Operations (MLOps) Engineers specializing in Feature Stores design and maintain scalable infrastructure to manage, version, and serve features for machine learning models efficiently. They ensure consistency between training and serving environments by integrating feature pipelines with real-time and batch processing systems. Expertise in platforms such as Feast, Tecton, or Hopsworks is critical for optimizing feature engineering workflows and accelerating model deployment cycles.
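Consistency between training and serving usually comes from defining each feature once and reusing that definition on both paths. The toy in-memory store below sketches that idea only. `InMemoryFeatureStore` and its methods are hypothetical and do not reflect the actual APIs of Feast, Tecton, or Hopsworks.

```python
from typing import Callable, Dict, List


class InMemoryFeatureStore:
    """Toy feature store: one registered definition serves batch and online use."""

    def __init__(self) -> None:
        self._transforms: Dict[str, Callable[[dict], float]] = {}
        self._online: Dict[str, Dict[str, float]] = {}

    def register(self, name: str, transform: Callable[[dict], float]) -> None:
        """Register a feature definition exactly once."""
        self._transforms[name] = transform

    def compute(self, raw: dict) -> Dict[str, float]:
        """Apply every registered transform to one raw record."""
        return {name: fn(raw) for name, fn in self._transforms.items()}

    def build_training_rows(self, raw_rows: List[dict]) -> List[Dict[str, float]]:
        """Batch path: features for offline training."""
        return [self.compute(row) for row in raw_rows]

    def materialize(self, entity_id: str, raw: dict) -> None:
        """Online path: precompute and cache features for low-latency serving."""
        self._online[entity_id] = self.compute(raw)

    def get_online_features(self, entity_id: str) -> Dict[str, float]:
        return dict(self._online[entity_id])


store = InMemoryFeatureStore()
store.register("value_per_item", lambda r: r["total"] / max(r["items"], 1))

raw = {"total": 120.0, "items": 4}
training_rows = store.build_training_rows([raw])
store.materialize("customer-1", raw)

# Both paths used the same definition, so the values match.
print(training_rows[0] == store.get_online_features("customer-1"))
```

Because the transform lives in one place, a change to the feature logic reaches training and serving together, which is the main defense against training/serving skew.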

Model Drift

Machine learning operations engineers monitor model drift to preserve predictive accuracy in dynamic environments. They track signals such as shifts in input data distributions and declines in accuracy, and they use automated retraining pipelines to respond. Tools such as MLflow, Kubeflow, or Seldon Core help them detect deviations in model behavior and raise alerts for early intervention. Proactive model management mitigates the risks associated with data drift, concept drift, and schema changes, preserving model reliability in production.
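A shift in a feature's distribution can be quantified with the Population Stability Index (PSI), one of several common drift statistics. The sketch below is a minimal standard-library version, assuming a single numeric feature and equal-width bins derived from the reference data. The function name, bin count, and the 0.2 cutoff (a widely used rule of thumb, not a fixed standard) are choices made for this example.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,  # floor for empty bins to avoid log(0)
) -> float:
    """PSI between a reference sample (e.g. training data) and a current sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values to edge bins
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    ref_frac = bin_fractions(reference)
    cur_frac = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_frac, cur_frac))


reference = [i / 100 for i in range(1000)]   # values from 0.00 to 9.99
shifted = [x + 5 for x in reference]         # same shape, moved upward by 5

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff
print(psi_same > DRIFT_THRESHOLD)
print(psi_shifted > DRIFT_THRESHOLD)
```

PSI only covers data drift in one feature. Concept drift, where the relationship between inputs and labels changes, generally needs labeled outcomes and accuracy tracking to detect.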

kuljobs.com
